After 5 Years I'm Trying To Write Sound Synthesis Programs Again

There's a story behind all this.

It was originally just a tuning theory learning trip

One day for some reasons I was looking for microtonal music online. The first time I listened to anything microtonal I was like "this is fine but weirdly wrong", but at that day I found a visualization video of Easley Blackwood's 15-TET etude and was immediately hooked. The whole thing is very exotic, the initial chords have a very uncanny feel (especially when they gradually gets louder in the first half), and the second half lifts the whole mood into a major-but-really-not-quite area. It's unlike anything I've heard, and it's really cool.

From what I've read online, 15-TET, unlike 19-TET and 22-TET [1], really isn't that close to the 12-TET system everybody's using in terms of musical languages, and that's probably why it sounds so jarring[2]; and then I listened to his 17-TET etude - it just sounds like it's a soundtrack for a game! If I can somehow mix different tuning systems into "normal" 12-TET music and have them work together nicely, then this would work like MSG; it's like putting in quintuplet swing, bringing in a subtle but different feeling for the listeners, making stuff that would otherwise be boring more interesting (for some people at least). It's always nice when you have more tools that you can use. So how can I use it and exactly what the heck is 5-limit? A lot of strange theory. Sure, I'll learn more theory, I'll learn all of 'em...

Basically:

- Human ear hears small whole number frequency ratio as (relatively) consonant. The smallest ratio is 2/1, which is what we called an octave. This is the most consonant, so consonant that most of the time we just consider two notes with a ratio of 2/1 the same note but "in the higher/lower octave".

- A system made up of whole number ratios is called a just intonation system.

- 12-TET system is not a just intonation system, because it's of the ratio

2^(n/12) / 1, which cannot be written as any small whole number ratio; so when compared with 12-TET, a 12-tone JI would sound more "consonant" or "in-tune".- The reason we use 12-TET nowadays instead of a JI is because:

- The existence of comma. Comma arises when you have more than one way to approach to what supposed to be the same note but ended up with different frequencies. This is a problem because it means we cannot just stack ratios indefinitely upwards. There are lots of commas, one of the most famous is the syntonic comma. This problem cannot be solved without compromising the "purity" of intervals because the ratios can never line up. (or you can choose to live with the problems with extra caution.)

- To fix this problem (i.e. "temper" the comma, hence the word "temperament") people came up with something called meantone temperament (which is NOT 12-TET), but meantone temperament has its own problems as well (e.g. the wolf intervals).

- The supposed-to-be-same intervals are not the same. e.g. when using the so-called Ptolemy's intense diatonic scale, while both supposed to be a perfect fifth, the distance between D-A and E-B is respectively 680 and 702 cents (a cent is 1/100 of a 12-TET semitone), that means you can't transpose a piece in one key into another key just by "moving the notes", because it'll sound different.

12-TET solves the comma problem, the wolf interval problem and the transpose problem by not using any just intervals but okay-ish approximations to them with evenly spaced-out intervals. That's why sometimes you'll hear people say "12-TET is actually out-of-tune" or "in order to be in-tune (i.e. with no comma & terrible wolf intervals) you have to be out-of-tune (i.e. with no good just intervals)"

- There are two kinds of limit: odd limits and prime limits.

- Assume all intervals of a JI have the form of

a:b, the odd-limit of this JI would be the maximum odd number among allas andbs.- Assume all intervals of a JI have the form of

a:b, when all theas andbs are factorized using the fundamental theorem of arithmetic, the prime-limit of this JI would be the biggest prime number involved in the factorization.- Why there's no even-limit? Because you can always multiply or divide by 2 - it corresponds to moving by octaves.

- For example, Ptolemy's intense diatonic scale, a scale with intervals C(1/1), D(9/8), E(5/4), F(4/3), G(3/2), A(5/3), B(15/8) and C(2/1), its odd-limit would be 15 (from B's 15/8) and its prime-limit would be 5 (from E 5/4, A 5/3 and B [3*5]/8). The pythagorean tuning is a 3-prime-limit tuning system, but it has (when only considering the notes C~B with no accidentals) a odd-limit of 27.

Making music with microtonal systems... or not

Now it's not like people weren't able to produce instruments using other tuning systems, but almost all music notation softwares have basically no regards of systems that are not 12-TET. There's plugins for MuseScore that can do xenharmonic stuff, but they only tune notes, and they're too much of a hassle for newbies like me. It would be cool if I could somehow build a program that would do all this in a newbie-friendly straight-forward way.

Update 2022.4.25: Nope, turns out this is actually pretty intuitive and I just didn't learn enough.

Generating sounds

Sound is basically air vibrations, which is fundamentally analog; we sample an analog signal to produce digital samples, so that we can work with them inside digital computers; and in order to produce analog signal from digital samples, we use an analog-digital converter. Now, in order to generate sound with software, we have to:

- somehow represents an analog signal.

- sample the signal to get samples.

That's it; the ADC is already inside your computer's sound card (it wasn't the case decades ago; but nowadays you can pretty much just assume it is).

What kind of sampling is sufficient for our need? According to the Nyquist-Shannon sampling theorem, in order to correctly determine a signal with a highest frequency of fHz, we need to have a sampling rate of 2fHz. Human ears can hear sound between 20Hz to roughly 20kHz (when you grow older the higher end will be dropping to something like 16kHz~17kHz due to ears wearing out) so in order to sample any sound properly we need a sampling rate of at least 40kHz, the two most widely-used one is 44.1kHz and 48kHz. Sometimes you'll have to use lower sampling rate for reasons - technical restrictions (e.g. radio broadcasts), smaller file size, etc., but then the higher frequency info is going to lost. (Note that the bit rate in MP3 files is not the same as sampling rate due to MP3 is a compression algorithm.)

There's a Python library called wave distributed with the official distribution. Don't know why it got in there in the first place, but you can use to make .wav file.

A very basic demo, written in Python, is listed below:

import math

import wave

# default to 44.1kHz

SAMPLE_RATE = 44100

# this function maps any value in [-1,1] to integers in [-32768, 32767]

# PRE: v_pm1 \in [-1, 1]

def mapper_16bit_signed(v_pm1: float) -> int:

return (v_pm1 + 1) / 2 * 65535 - 32768

def sine(freq_hz: float, amp_01: float, phase: float):

def res(t_s: float):

return amp_01 * math.sin(2*math.pi*freq_hz*t_s+phase)

return res

# the sine wave formula is: y(t) = A*sin(2*PI*f*t + phi)

# where:

# t would be the time in seconds.

# A is the amplitude; y(t) will inside the interval of [-A, A].

# f is the frequency.

# phi is the initial angle in radians; 2*PI equals to 360 degree.

def sample(f, sample_rate_hz: int, time_s: float):

s = []

t = 0

sample_length = sample_rate_hz * time_s

i = 0

while i < sample_length:

ss = mapper_16bit_signed(f(t))

# the lower byte first - little endian.

s.append(bytes([ss&0xff, (ss&0xff00)>>8]))

# we increase `t` by 1/sample_rate_hz so when it reaches 1 it would be exactly `sample_rate_hz` samples.

t += 1/sample_rate_hz

i += 1

return b''.join(s)

# 440Hz: A

test_f = sine(440, 0.5, 0)

v = sample(test_f, SAMPLE_RATE, 1.5)

output_file = wave.open('test.wav', 'wb')

# a "sample width" of 2: 2-bytes, 16-bit

output_file.setsampwidth(2)

# generate mono wave file.

output_file.setnchannels(1)

output_file.setframerate(SAMPLE_RATE)

output_file.writeframes(v)

output_file.close()

Sometimes I don't understand the so-called "software engineers" in this country. I've done almost exactly the same thing as above 5 years ago, but when I wrote the blog post (which I've deleted a long time ago) for this I got called a stupid scamming crackpot by someone in one of those so-called "community" for not knowing wavelet transform. 4 years later this happened with no mention of any kind of "transform" anywhere, and when that post got posted in that "community", nobody complained a goddamn thing. I agree that I've done way too little compared to that blogpost and I definitely do not know how wavelet transform works or how that can be applied to what I want to do, but here's the thing: if something's really important for my intent, then I'm definitely willing to learn; and if I did something wrong or had the wrong idea, you could just tell me directly - what the hell is that crackpot accusation??

Calculate frequencies for equal temperaments

In a n-tone equal temperaments, one starts from the first note, multiplies a ratio and gets the second note, multiplies that ratio on the second note and get the third note, etc., until he gets to the nth note whose frequency is twice of the frequency of the first note; so for n-TET, the ratio r would need to satisfy the equation r^n = 2, from which we can derive r = 2^(1/n) (r has to be bigger than 1 for obvious reasons).

From that we can have this function:

def mk_tet(base: float, n: int):

res = []

for i in range(n):

res.append(math.pow(2, i/n) * base)

return res

This will return a list of frequencies which corresponds to n-TET starting from the frequency base.

We can construct the frequencies of A minor from here:

a_chromatic = mk_tet(440, 12)

a_chromatic_lower = mk_tet(220, 12)

a_minor = [

a_chromatic_lower[0], # A (lower octave)

a_chromatic_lower[2], # B (lower octave)

a_chromatic_lower[3], # C (lower octave)

a_chromatic_lower[5], # D (lower octave)

a_chromatic_lower[7], # E (lower octave)

a_chromatic_lower[8], # F (lower octave)

a_chromatic_lower[10], # G (lower octave)

a_chromatic[0], # A

a_chromatic[2], # B

a_chromatic[3], # C

a_chromatic[5], # D

a_chromatic[7], # E

a_chromatic[8], # F

a_chromatic[10], # G

]

Now I'll generate a little tune with it. Before that there's one more problem to solve: the length of each note is (in the most case) not measured in exact seconds on sheet music; we have to calculate it from the tempo marker and the note values. There are two sorts of common tempo marking: one kind only consist a description, like "Lento" (meaning "slow" in Italian, a slowtempo), "Allegro" (meaning "cheerful" in Italian, a fast tempo) and "Vivace" (meaning "lively" in Italian, a tempo faster than allegro); the other kind is of the form of "[a certain note value] = [a number]", meaning "a beat consists of a [the note value] note, and there's [the number] beats in a minute". A very common tempo is "quarter note = 120", which means with this tempo a quarter note is 60/120 = 0.5 seconds long, an eighth note 0.25 seconds, a half note 1 second, etc.

From all that we have the following code:

a_minor_sine = [sine(i, 0.5, 0) for i in a_minor]

# speed: quarter note = 120

sample_list = [

sample(a_minor_sine[7], 44100, 0.25),

sample(a_minor_sine[8], 44100, 0.25),

sample(a_minor_sine[9], 44100, 0.25),

sample(a_minor_sine[10], 44100, 0.25),

sample(a_minor_sine[8], 44100, 0.5),

sample(a_minor_sine[6], 44100, 0.25),

sample(a_minor_sine[7], 44100, 0.75),

]

output_file = wave.open('test.wav', 'wb')

output_file.setsampwidth(2)

output_file.setnchannels(1)

output_file.setframerate(44100)

for s in sample_list:

output_file.writeframes(s)

output_file.close()

One way to generate chords is to add the signals together. Note that different notes that rings together would require a cut on their amplitude, so that when added together it'll still have the same maximum amplitude; this will not affect the pitch, because pitch is determined by frequency:

a_chromatic = mk_tet(440, 12)

a_chromatic_lower = mk_tet(220, 12)

a_chromatic_lower2 = mk_tet(110, 12)

a_chromatic_higher = mk_tet(880, 12)

a_chromatic_higher2 = mk_tet(880*2, 12)

a_chromatic_sine = [sine(i, 0.1, 0) for i in (

a_chromatic_lower2 +

a_chromatic_lower +

a_chromatic +

a_chromatic_higher +

a_chromatic_higher2

)]

def mk_chord(f_list):

l = len(f_list)

def res(t_s: float):

return sum([i(t_s)/l for i in f_list])

return res

# Bmin7-E7-AM7-DM7

# speed: quarter note = 90

sample_list = [

sample(

# Bmin7(no5): B D (F#) A

mk_chord([

a_chromatic_sine[0*12+2], # B(lower)

a_chromatic_sine[1*12+2], # B

a_chromatic_sine[1*12+5], # D

a_chromatic_sine[1*12+12], # A

]),

44100,

60/90 * 2,

),

sample(

# E7(no5): E G# (B) D

mk_chord([

a_chromatic_sine[0*12+7], # E(lower)

a_chromatic_sine[1*12+7], # E

a_chromatic_sine[1*12+5], # D

a_chromatic_sine[1*12+11], # G#

]),

44100,

60/90 * 2,

),

sample(

# AM7(no5): A C# (E) G#

mk_chord([

a_chromatic_sine[0*12+0], # A(lower)

a_chromatic_sine[1*12+0], # A

a_chromatic_sine[1*12+4], # C#

a_chromatic_sine[1*12+11], # G#

]),

44100,

60/90 * 2,

),

sample(

# DM7(no5): D F# (A) C#

mk_chord([

a_chromatic_sine[0*12+5], # D(lower)

a_chromatic_sine[1*12+5], # D

a_chromatic_sine[1*12+4], # C#

a_chromatic_sine[1*12+9], # F#

]),

44100,

60/90 * 2,

),

]

output_file = wave.open('test.wav', 'wb')

output_file.setsampwidth(2)

output_file.setnchannels(1)

output_file.setframerate(44100)

for s in sample_list:

output_file.writeframes(s)

output_file.close()

Refactoring

Then again, the code above is not a good way to model signals & sampling & stuff. There's a few reasons to this. I was trying to implement ADSR envelope while writing this blog post, and I've met quite a few problems. An ADSR envelope is defined by the following parameters:

- Attack: the time it takes for the volume to get to the maximum after the key is pressed.

- Delay: the time it takes for the volume to drop to the sustain level after hitting the maximum.

- Sustain: the level the volume need to stay at when the key is still hold

- Release: the time the volume went from sustain level to 0 after the key is released

There are other parameters as well in some synthesizers and digital audio workstations, but these are the most basic; notice the pressed and the released, that means instead of being a parameter for the function sample, time_s is controlled by something else. You might say this is controlled by note value, we can model it using a sequence of notes, something like this:

@dataclass

class Note:

name: str

freq: float

value: float # 1/2 for half note, 1/4 for quarter note, etc.

# ...

def sample(f, note_list: list[Note]):

# ...

some_adsr = ADSR(**some_adsr_params)

for note in note_list:

f(some_adsr, note)

But no, that's not a good idea, because:

- Rather than a single sequence, music can be a lot of sequences; a lot of stuff can happen at the same time in a piece of music, and that has been the norm for a very very long time.

- Notes in a music does not always line up perfectly. Western classical music often uses a technique called a "suspension", which means some notes are arranged (by the composer) to be kept on for a longer period than its peers. Gospel organ[3] often uses this technique to connect one big[4] chord to the next big chord.

- Note themselves does not always fit perfectly into the idea of "note values". Human playings are not always perfect as if they're fully quantized to a fixed grid, sometimes they do it because they're not good enough players, sometimes they do it because it's a part of the style, etc.... that's why MIDI-to-sheet-music conversion will almost always be flawed.

- In MIDI, a note is defined not by a pitch and a note value, but rather than a pitch, a "press" (called "note on") event and a "release" (called "note off") event.

A better idea is to follow MIDI:

- We have a series of "channels" or "voices" that can be used to play notes. General MIDI Level 1 specification requires to support playing notes simultaneously on at least 24 channels. We don't have to have exactly 24 channels but we can make use of this abstraction here.

- On these channels we have a sequence of "note on" and "note off" events.

And we do something like this:

# ...

res = []

channel_list_len = len(channel_list)

t = 0

i = 0

while any((not chnl.no_more_event()) for chnl in channel_list):

s = 0

for chnl in channel_list:

e = chnl.event[0]

if e.time <= t:

chnl.handle_event(e)

chnl.event.remove(e)

# NOTE: this part uses the same principle of sampling chords

s += (1/channel_list_len) * sample_channel_current_value(chnl, t)

res.push(mapper_16bit_signed(s))

t += 1/sample_rate_hz

# ...

...or at least that's the way I'm gonna do. There probably are better ways, I just can't think of any right now.

Now, how do we design the type of chnl? We can have the following idea:

- A channel is assigned with a certain generator.

- For obvious reasons we want each channel to have its own ADSR envelope instead of using only one envelope on all channel.

- And we want channels to be able to synthesize multiple notes at the same time.

some_adsr, of course, would have the basic parameters of a ADSR envelope.

From all that we now have the new version:

import enum

import wave

import math

import dataclasses

from dataclasses import dataclass

from typing import Callable

# PRE: v_pm1 \in [-1, 1]

def mapper_16bit_signed(v_pm1: float) -> int:

return (v_pm1 + 1) / 2 * 65535 - 32768

@enum.unique

class ChannelEventKind(enum.Enum):

NOTE_ON = 1

NOTE_OFF = 2

@dataclass

class ChannelEvent:

kind: ChannelEventKind

abs_t: float

freq: float

@dataclass

class ADSR(Callable):

attack_ms: float

decay_ms: float

sustain_01: float

release_ms: float

def __call__(self, e: ChannelEvent, dt: float, raw: float):

if e.kind is ChannelEventKind.NOTE_ON:

# attack stage: scale between [0, 1]

if dt < self.attack_ms/1000:

return raw*(dt/(self.attack_ms/1000))

# decay stage: scale between [1, self.adsr.sustain_01]

elif dt - self.attack_ms/1000 < self.decay_ms/1000:

dt = dt - self.attack_ms/1000

a = 1 - dt / (self.decay_ms/1000)

b = a * (1-self.sustain_01) + self.sustain_01

return raw * b

# sustain stage

else:

return raw * self.sustain_01

elif e.kind is ChannelEventKind.NOTE_OFF:

# release stage: scale between [self.adsr.sustain_01, 0]

if dt < self.release_ms/1000:

a = 1 - dt / (self.release_ms/1000)

return raw * a * self.sustain_01

else:

return None

@enum.unique

class ChannelState(enum.Enum):

ON = 1

OFF = 2

def sine(freq_hz: float, time_s: float):

return math.sin(2*math.pi*freq_hz*time_s)

@dataclass

class Channel:

adsr: ADSR

osc: Callable[[float, float], float] = sine

current_sounding_freq: dict = dataclasses.field(default_factory=dict)

current_amp_01: float = 1

def sample(self, abs_t_s: float) -> float:

# NOTE: the reason why i do this will be explained later.

current_sounding_freq_len = len([i for i in self.current_sounding_freq.values() if i.kind is ChannelEventKind.NOTE_ON])

# current_sounding_freq_len = len(self.current_sounding_freq)

if current_sounding_freq_len <= 0: return 0

raw_note_factor = 1/current_sounding_freq_len

raw_res = 0

delete_freq = []

for i in self.current_sounding_freq:

e = self.current_sounding_freq[i]

dt = abs_t_s - e.abs_t

this_raw = self.osc(i, abs_t_s)

adsr_res = self.adsr(e, dt, this_raw)

if adsr_res is None:

delete_freq.append(i)

else:

raw_res += raw_note_factor * adsr_res

for i in delete_freq:

del self.current_sounding_freq[i]

return raw_res * self.current_amp_01

def handle_event(self, e: ChannelEvent):

self.current_sounding_freq[e.freq] = e

def synthesize(sample_rate_hz: int, channel_list: list[Channel], channel_event_list: list[list[ChannelEvent]]):

res = []

channel_list_len = len(channel_list)

t = 0

i = 0

channel_event_list_copy = [i[::1] for i in channel_event_list]

while any(channel_event_list_copy):

s = 0

for i in range(min(len(channel_event_list_copy), len(channel_list))):

chnl = channel_list[i]

evl = channel_event_list_copy[i]

# NOTE: it would be nice if you can push a value into a list from the *left*

# hand side like `Array.prototype.unshift` in JavaScript. we don't have that

# in Python, so I used the right hand side instead. the event list in the

# demo section is reversed accordingly.

e = evl[-1]

while evl and e.abs_t <= t:

chnl.handle_event(e)

evl.pop()

if not evl: break

e = evl[-1]

s += (1/channel_list_len)*chnl.sample(t)

rs = int(mapper_16bit_signed(s))

res.append(rs&0xff)

res.append((rs&0xff00)>>8)

t += 1/sample_rate_hz

return bytes(res)

def mk_tet(base: float, n: int):

res = []

for i in range(n):

res.append(math.pow(2, i/n) * base)

return res

a_chromatic = (

mk_tet(110, 12)

+ mk_tet(220, 12)

+ mk_tet(440, 12)

+ mk_tet(880, 12)

+ mk_tet(880*2, 12)

)

# demo section.

DEFAULT_ADSR = ADSR(attack_ms=200, decay_ms=100, sustain_01=0.8, release_ms=300)

CHANNEL_LIST = [

Channel(adsr=DEFAULT_ADSR, current_amp_01=0.7),

]

CHANNEL_EVENT_LIST = [

list(reversed([

ChannelEvent(ChannelEventKind.NOTE_ON, 0, a_chromatic[0*12+2]),

ChannelEvent(ChannelEventKind.NOTE_ON, 0, a_chromatic[1*12+2]),

ChannelEvent(ChannelEventKind.NOTE_ON, 0, a_chromatic[1*12+5]),

ChannelEvent(ChannelEventKind.NOTE_ON, 0, a_chromatic[1*12+12]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2, a_chromatic[0*12+2]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2, a_chromatic[1*12+2]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2, a_chromatic[1*12+5]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2, a_chromatic[1*12+12]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2, a_chromatic[0*12+7]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2, a_chromatic[1*12+7]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2, a_chromatic[1*12+5]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2, a_chromatic[1*12+11]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*2, a_chromatic[0*12+7]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*2, a_chromatic[1*12+7]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*2, a_chromatic[1*12+5]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*2, a_chromatic[1*12+11]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*2, a_chromatic[0*12+0]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*2, a_chromatic[1*12+0]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*2, a_chromatic[1*12+4]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*2, a_chromatic[1*12+11]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*3, a_chromatic[0*12+0]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*3, a_chromatic[1*12+0]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*3, a_chromatic[1*12+4]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*3, a_chromatic[1*12+11]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*3, a_chromatic[0*12+5]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*3, a_chromatic[1*12+5]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*3, a_chromatic[1*12+4]),

ChannelEvent(ChannelEventKind.NOTE_ON, 60/90*2*3, a_chromatic[1*12+9]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*4, a_chromatic[0*12+5]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*4, a_chromatic[1*12+5]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*4, a_chromatic[1*12+4]),

ChannelEvent(ChannelEventKind.NOTE_OFF, 60/90*2*4, a_chromatic[1*12+9]),

])),

]

output_file = wave.open('test.wav', 'wb')

output_file.setsampwidth(2)

output_file.setnchannels(1)

output_file.setframerate(44100)

s = synthesize(44100, CHANNEL_LIST, CHANNEL_EVENT_LIST)

output_file.writeframes(s)

output_file.close()

A more sophisticated envelope

I would like to add two parameters to the basic ADSR:

- Hold: the time to keep at maximum amplitude after the attack stage.

- Sustain Time: the maximum time to hold at the sustain level. After the sustain time has passed, the envelope will enter release stage even if there's no NOTE_OFF event.

When the hold time is 0 and sustain time is positive infinity, it works in the same way as the basic ADSR envelope.

@dataclass

class ADSR(Callable):

attack_ms: float

decay_ms: float

sustain_01: float

release_ms: float

hold_ms: float = 0

sustain_ms: float = float('inf')

def __call__(self, e: ChannelEvent, dt: float, raw: float):

attack_s = self.attack_ms/1000

hold_s = self.hold_ms/1000

decay_s = self.decay_ms/1000

if e.kind is ChannelEventKind.NOTE_ON:

# attack stage: scale between [0, 1]

if dt < attack_s:

return raw*(dt/attack_s)

# hold stage: keep at 1

elif dt - attack_s < hold_s:

return raw

# decay stage: scale between [1, self.adsr.sustain_01]

elif dt - attack_s - hold_s < decay_s:

dt = dt - attack_s - hold_s

a = 1 - dt / decay_s

b = a * (1-self.sustain_01) + self.sustain_01

return raw * b

# sustain stage

else:

dt = dt - attack_s - hold_s - decay_s

if dt < self.sustain_ms/1000:

return raw * self.sustain_01

else:

# release stage: scale between [self.sustain_01, 0]

dt = dt - self.sustain_ms/1000

if dt < self.release_ms/1000:

a = 1 - dt / (self.release_ms/1000)

return raw * a * self.sustain_01

else:

return None

elif e.kind is ChannelEventKind.NOTE_OFF:

# release stage: scale between [self.adsr.sustain_01, 0]

if dt < self.release_ms/1000:

a = 1 - dt / (self.release_ms/1000)

return raw * a * self.sustain_01

else:

return None

We need to modify the class Channel as well: when a note is already "done playing", the NOTE_OFF message should have no effect.

# in class Channel

# ...

def handle_event(self, e: ChannelEvent):

if (e.kind is ChannelEventKind.NOTE_OFF

and self.current_sounding_freq.get(e.freq) is None):

return

self.current_sounding_freq[e.freq] = e

Other kinds of oscillators

Currently we only have sine wave, which is pretty boring. We'll add a few more:

import math

import random

def square(freq_hz: float, time_s: float):

cycle = time_s/(1/freq_hz)

cycle_off = cycle - math.floor(cycle)

return -1 if cycle_off >= 0.5 else 1

def triangle(freq_hz: float, time_s: float):

cycle = time_s*freq_hz

cycle_off = cycle - math.floor(cycle)

return (

# [0, 0.25] map to [0, 1]

cycle_off*4 if cycle_off <= 0.25

# [0.25, 0.75] map to [1, -1]

# -0.5: [-0.25, 0.25]

# neg: [0.25, -0.25]

# *4: [1, -1]

else (cycle_off-0.5)*-4 if cycle_off <= 0.75

# [0.75, 1] map to [-1, 0]

# 1-: [0.25, 0]

# neg: [-0.25, 0]

# *4: [-1, 0]

else (1-cycle_off)*-4

)

def sawtooth_rising(freq_hz: float, time_s: float):

cycle = time_s*freq_hz

cycle_off = cycle - math.floor(cycle)

return cycle_off*2-1

def sawtooth_falling(freq_hz: float, time_s: float):

cycle = time_s*freq_hz

cycle_off = cycle - math.floor(cycle)

return (1-cycle_off)*2-1

def noise(freq_hz: float, time_s: float):

# NOTE: we don't use `freq_hz` or `time_s` here because it's just noise

return random.random()*2-1

Combining the voices, revisited

In the code above I wrote:

class Channel:

# ...

def sample(self, abs_t_s: float) -> float:

# NOTE: the reason why i do this will be explained later.

current_sounding_freq_len = len([i for i in self.current_sounding_freq.values() if i.kind is ChannelEventKind.NOTE_ON])

# current_sounding_freq_len = len(self.current_sounding_freq)

I was adding and testing out new kinds of oscillators when this happened. replace the code above with this:

# ...

def sample(self, abs_t_s: float) -> float:

# current_sounding_freq_len = len([i for i in self.current_sounding_freq.values() if i.kind is ChannelEventKind.NOTE_ON])

current_sounding_freq_len = len(self.current_sounding_freq)

...and change the channel's oscillator to square wave:

CHANNEL_LIST = [

Channel(adsr=DEFAULT_ADSR, osc=square, current_amp_01=0.7),

]

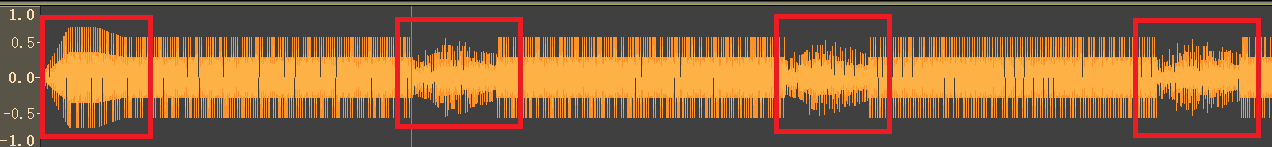

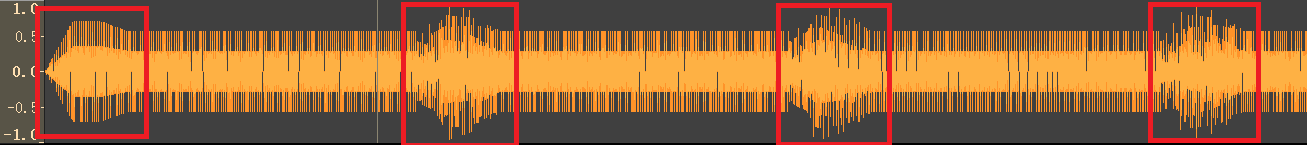

The generated result, when opened in Audacity[5], looks like this:

You can see among the four red rectangles I draw, the wave in the last 3 does not have a correct shape; with the envelope setup, it should look like the one in the first rectangle.

The problem, I think, is in the way I handle sampling multiple voices at once. In the code of sample, I decided that raw_note_factor should have the value of 1/len(self.current_sounding_freq), which adds too much weight to the voices that's already released and adds too little weight to the voices that's still pressed. The idea I use to correct this is to first disregard the voices that's already released and only adds their value as an addendum afterwards (the code above only removes the notes after their release stage ended; those voices which is already NOTE_OFF but still in the release stage would still remain in self.current_sounding_freq). This is probably not the correct way, but at least it's more natural now:

Demo code

The code for this blog post can be found here. I'll add the "front-end" for all this later...